Creating Persona Chatbots (with a focus on evaluation)

Course project for CS263A: Natural Language Processing

This project explores different aspects of persona injection, using prompt tuning techniques (specifically in-context and chain-of-thought methods) to generate open-ended dialogues that closely align with the assigned persona. The model (Mistral 7B baseline) was evaluated with LLMEval for fluency and coherency and with MMLU for reasoning ability. Toxicity and HDS scores were also evaluated.

Results

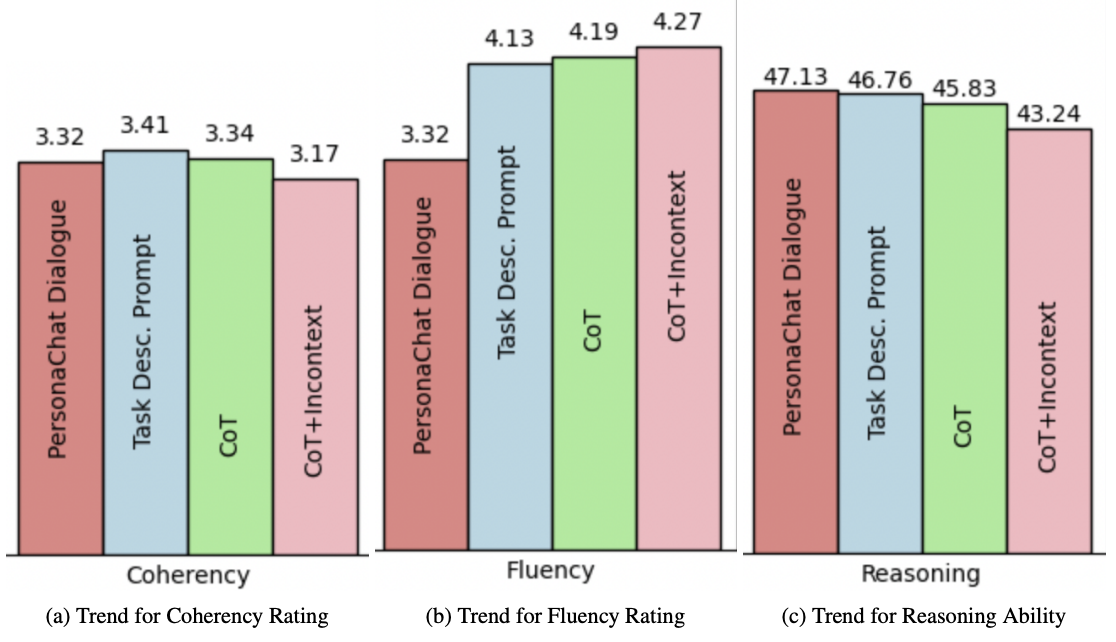

- Fluency & Coherency: Achieved higher fluency and coherency than human-generated dialogues, with GPTEval scores: Mistral (3.18), GPT-3.5 (3.32), GPT-4 (3.32), Llama 3 (3.79), Phi (3.95).

- Reasoning Ability (MMLU Score): Decreased as persona alignment improved: baseline 47.13%, CoT 45.83%, CoT + In-context 43.24%.

- Perplexity Score: 13.9 for Mistral-7B, indicating slightly lower fluency due to dataset inconsistencies.

- Toxicity Score: GPT-2: 0.029 ± 0.0066, Our model: 0.104 ± 0.047, showing increased but controlled toxicity.

- Honesty & Bias (HDS Score): GPT-2: 0.076, Our model: 0.0258, indicating reduced harmful content variance across demographic groups.
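
For readers unfamiliar with the perplexity figure reported above, here is a minimal sketch of how perplexity is conventionally computed from per-token log-probabilities. The function name and the sample values are hypothetical and are not taken from this project's code; it assumes natural-log probabilities are already available from the model.

```python
import math


def perplexity(token_logprobs):
    """Return exp of the negative mean natural-log probability per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


if __name__ == "__main__":
    # Hypothetical log-probabilities for a four-token sequence (illustration only).
    example = [math.log(0.25)] * 4
    print(perplexity(example))
```

Lower values mean the model assigns higher probability to the observed tokens.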

See "this repository" for more details.

Figure (a) shows the trend in coherency scores for GPT4Eval. Figure (b) shows the same for fluency scores.

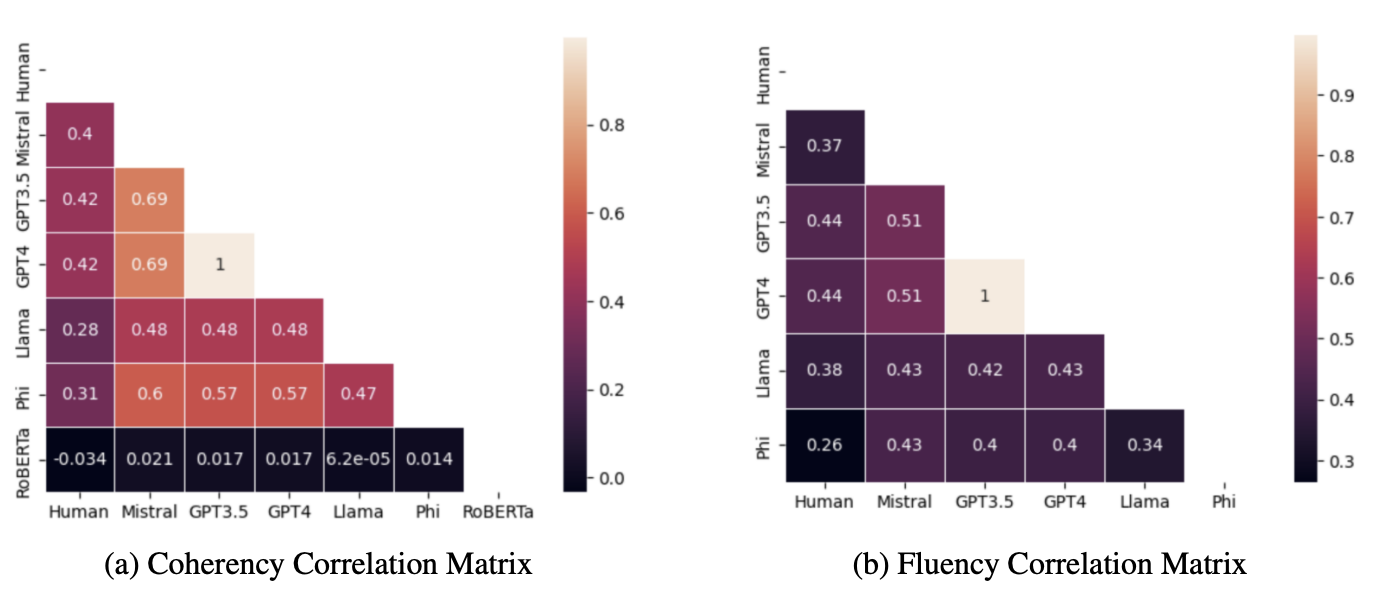

Figure (a) shows the correlation matrix of LLMEval coherency scores across the different models and human evaluation. Figure (b) shows the correlation matrix of fluency scores across models and humans. Lighter colors represent higher correlation, which is desirable.
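
A correlation matrix like the one described here can be sketched as follows. This is an illustration only: it assumes Pearson correlation (the coefficient actually used is not stated here), and the function names, evaluator names and scores are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0 or norm_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (norm_x * norm_y)


def correlation_matrix(scores):
    """Map each pair of evaluators to the correlation of their scores."""
    names = list(scores)
    return {a: {b: pearson(scores[a], scores[b]) for b in names} for a in names}


if __name__ == "__main__":
    # Hypothetical per-dialogue scores from three evaluators (illustration only).
    scores = {
        "evaluator_a": [3.0, 4.0, 2.0, 5.0],
        "evaluator_b": [3.5, 4.5, 2.5, 5.0],
        "human": [2.0, 4.0, 3.0, 5.0],
    }
    for name, row in correlation_matrix(scores).items():
        print(name, {k: round(v, 2) for k, v in row.items()})
```

Each row lists how closely one evaluator's scores track every other evaluator's scores on the same dialogues.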

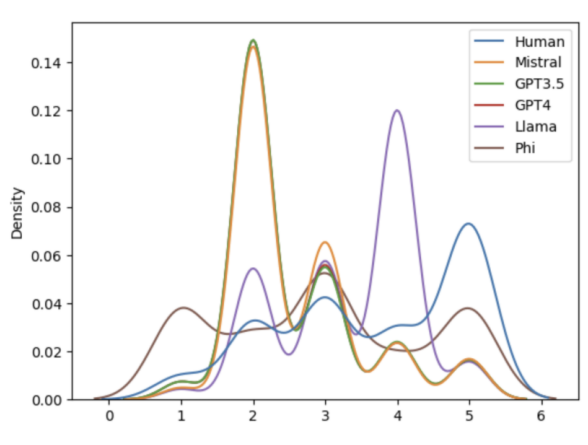

Kernel density estimate plots of the coherency score distribution across different models and humans.
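
For reference, a kernel density estimate of a score distribution can be sketched as below. This assumes a Gaussian kernel with a hand-picked bandwidth; the kernel and bandwidth behind the plots are not stated here, and the function name and sample scores are hypothetical.

```python
import math


def gaussian_kde(samples, bandwidth):
    """Return a function that estimates the density of `samples` at a point x."""
    if not samples or bandwidth <= 0:
        raise ValueError("need at least one sample and a positive bandwidth")
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        # Sum one Gaussian bump per sample, centered on that sample.
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density


if __name__ == "__main__":
    # Hypothetical coherency scores on a 1-5 scale (illustration only).
    density = gaussian_kde([3.0, 4.0, 4.0, 5.0], bandwidth=0.5)
    for x in (2.0, 3.0, 4.0, 5.0):
        print(x, round(density(x), 3))
```

A smaller bandwidth produces a spikier curve, while a larger one smooths over differences between evaluators.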